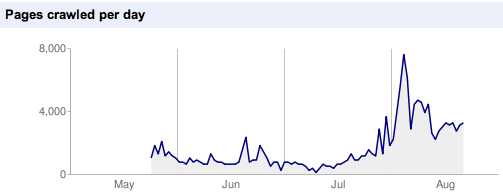

I love Google – they send me stacks of traffic and help sites like Booko reach a far greater audience than I could manage on my own. Recently, however, Google has taken a bigger interest in Booko than usual. Numbers like these are no problem in general – the web server and database can easily handle the load.

The problem for Booko, when Google comes calling, is that they request pages for specific books such as:

http://www.booko.com.au/books/isbn/9780140232929

When this request comes in, Booko checks how old the prices are – if they’re more than 24 hours old, Booko attempts to update them. Booko used to load the prices into the browser via AJAX, so, as far as I can tell, Google wasn’t even seeing the prices. Further, Booko has a queuing system for price lookups, so each page Google requests adds a book to the queue of books to be looked up. Google views books faster than Booko can grab the prices, so we end up with hundreds of books scheduled for lookup. That frustrates normal Booko users, who see a page full of spinning wheels and wonder why Booko isn’t giving them prices. Meanwhile, the price grabbers are hammering through hundreds of requests from Google and, in turn, hammering all the sites Booko indexes. So, what to do?

Well, the first thing I did was drop Google’s traffic. I hate the idea of doing this, but Booko needs to be available for people to use, and being indexed by Google won’t help if you can’t actually use the site. So to the iptables command we go:

iptables -I INPUT -s 66.249.71.108 -j DROP

iptables -I INPUT -s 66.249.71.130 -j DROP

These commands drop all traffic from those two Google crawler addresses.

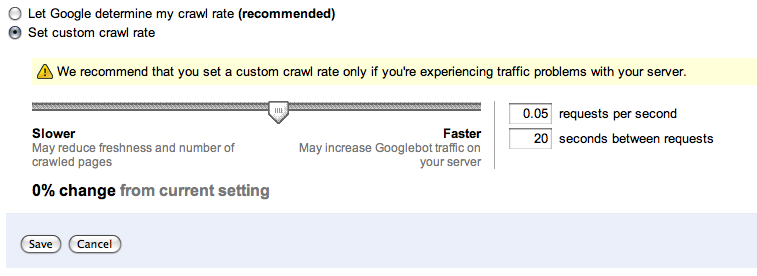

The next step was to sign up for Google Webmaster Tools and reduce the page crawl rate.

Once I’d dialled back Google’s crawl rate, I dropped the iptables rules (note that -F flushes every rule in the filter table, not just these two):

iptables -F

To make Booko more Google friendly, the first code change was to have book pages rendered immediately with the available pricing (provided it’s complete) and have updates to that pricing delivered via AJAX. Google now gets to see the entire page and should (hopefully) provide better indexing.
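As a rough illustration of that first change, here is a minimal Python sketch of the decision a book page might make at render time. It is not Booko's actual code: the function name, its parameters and the returned dictionary are all hypothetical, and the only detail taken from this post is the 24-hour freshness threshold.

```python
from datetime import datetime, timedelta

# The 24-hour threshold mentioned earlier in the post.
MAX_PRICE_AGE = timedelta(hours=24)


def plan_book_page(prices, complete, last_updated, now):
    """Decide what goes into the initial HTML and whether a refresh is needed.

    Every name here is hypothetical; this only illustrates the idea.
    """
    stale = last_updated is None or now - last_updated > MAX_PRICE_AGE
    return {
        # Stored prices go straight into the page only when they are complete.
        "inline_prices": prices if complete else [],
        # Stale or incomplete prices trigger a lookup; results arrive via AJAX.
        "needs_refresh": stale or not complete,
    }
```

With something like this, a crawler that never runs the AJAX call still sees whatever complete pricing is already stored.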

The second change was to create a second queue for price updates – the bulk queue. The price grabbers will first check for regular price update requests – meaning people will get their prices first. Requests by bulk users, such as Google, Yahoo & Bing, will be added to the bulk queue and looked up when there are no normal requests. In addition, I can restrict the number of price grabbers which will service the bulk queue.
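The two-queue arrangement can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the post does not say how Booko recognises bulk users, so matching on User-Agent substrings is a guess, and the class, method and parameter names are made up for the example.

```python
from collections import deque

# Assumption: bulk users are spotted by User-Agent substring.
# These markers are illustrative, not Booko's real list.
BULK_USER_AGENTS = ("googlebot", "slurp", "bingbot")


class PriceQueues:
    """Two FIFO queues: regular requests always win over bulk ones."""

    def __init__(self):
        self.regular = deque()
        self.bulk = deque()

    def enqueue(self, isbn, user_agent):
        """Route a lookup request to the bulk or regular queue."""
        ua = user_agent.lower()
        is_bulk = any(marker in ua for marker in BULK_USER_AGENTS)
        (self.bulk if is_bulk else self.regular).append(isbn)

    def next_job(self, serves_bulk=True):
        """Return the next ISBN to look up, or None if there is nothing to do.

        A grabber created with serves_bulk=False never touches the bulk
        queue, which is one way to cap how many grabbers service it.
        """
        if self.regular:
            return self.regular.popleft()
        if serves_bulk and self.bulk:
            return self.bulk.popleft()
        return None
```

Each price grabber would call next_job() in its loop; passing serves_bulk=False to most of them keeps crawler traffic from ever occupying the whole pool.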

This work has now opened up a new idea I’ve been thinking about – pre-emptively grabbing the prices of the previous day’s or week’s most popular titles. The idea would be to add these popular titles to the bulk queue during the quiet time between 03:00 and 06:00. That would mean that when people viewed one of those titles later that day, its prices would already be fresh.
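A minimal Python sketch of how that pre-emptive refresh might pick its work follows. Nothing here is implemented in Booko yet: the function names are hypothetical, the view log is assumed to be a plain list of ISBNs, and the only figures taken from the post are the 03:00 to 06:00 window.

```python
from collections import Counter
from datetime import time

# Quiet period from the post: 03:00 up to (but not including) 06:00.
QUIET_START = time(3, 0)
QUIET_END = time(6, 0)


def in_quiet_window(now):
    """Return True when the given time of day falls in the quiet period."""
    return QUIET_START <= now < QUIET_END


def most_popular(viewed_isbns, limit):
    """Return up to `limit` ISBNs, most viewed first.

    `viewed_isbns` is assumed to hold one entry per page view.
    """
    return [isbn for isbn, _ in Counter(viewed_isbns).most_common(limit)]
```

A scheduled job could check in_quiet_window() and, if it holds, push the result of most_popular() onto the bulk queue.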

I’ve just pushed these changes into the Booko site and, with some luck, Google & Co will be happier, Booko users will be happier and I should be able to build new features with this groundwork laid. Nice for a Sunday evening’s work.